Most research around the state of the AI industry talks about how the majority of initiatives are still immature and models rarely make it to production. To discover whether these pervasive ideas are still gospel in 2021, Run:AI commissioned a survey of more than 200 industry pros from 10 countries, including data scientists, MLOps/IT practitioners and system architects. Collected during the third quarter of 2021, the survey responses came primarily from experts at large enterprise companies. Respondents opened up about the technologies they use, the challenges they face with AI, the size of their AI budgets, and their confidence in bringing AI into production.

The resulting report shows an industry that, despite being in the early stages of maturity, holds an exciting amount of potential. Three-quarters of those surveyed are looking to expand their AI infrastructure, and 38% have more than $1 million in annual budget to make that happen. In an effort to innovate and outpace the competition, companies are putting considerable investment into AI initiatives, even though many still face early-stage hurdles with AI infrastructure setup, data preparation, and even goal-setting.

The survey data brings to light fascinating commonalities among companies of all sizes, and at times, sharp contrasts emerge between the ambitious corporate vision of AI in production and the day-to-day reality of scaling AI workflows. Here are a few key findings:

AI Is a Cloud-Native World

AI was clearly born with the cloud in mind, with 81% of companies using containers and cloud technologies for their AI workloads.

Fig: Use of Containers for AI Workloads

Along with containers comes adoption of Kubernetes and other cloud-native tools for container management. A sizable 42% of respondents are already on Kubernetes, another 13% on OpenShift, and 2% on Rancher/SUSE. These numbers are considerably higher than container adoption rates for non-AI workloads, making AI a leader in cloud-native adoption.

Big Spenders, But a Lack of Confidence

The survey shows that 38% of companies have a budget of more than $1M per year for AI infrastructure alone, and 59% have more than $250k per year. Budgets this large would suggest that the companies surveyed are highly confident they can get AI models into production.

Fig: Annual AI Infrastructure Budget

However, for 77% of companies, less than half of models make it to production. Further, 88% of companies say that they are not fully confident in their AI infrastructure setup and aren’t sure that they can move their models to production within the timeline and budget provided.

Infrastructure Challenges Weigh Heavily on AI Teams

Infrastructure struggles extend to hardware utilization: more than 80% of surveyed companies are not fully utilizing their GPU and AI hardware, and 83% admit to idle resources or only moderate utilization.

Fig: GPU and AI Hardware Utilization

Only 27% say that their research teams can access GPUs on demand as needed, while a sizable share of respondents rely on manual requests to allocate compute resources.

Fig: Do Research Teams Have On-Demand Access to GPU Compute?

Budgets are Growing, Despite Challenges

AI challenges are relevant across all respondents, regardless of company size, industry, AI spend, or infrastructure location (cloud, hybrid, or on-premises). Infrastructure utilization is an issue for between 85% and 90% of respondents, even among companies that have $10M or more budgeted for AI each year. Despite this, most companies are not holding back spending until these challenges are solved, with 74% planning to increase spend on AI infrastructure in the next year.

Fig: Plans to Increase Spend on GPU Capacity or Additional AI Infrastructure

Conclusion

There’s strong financial support backing AI projects, but it needs to be channeled to the right places: improving the systems used for AI infrastructure management, solving hardware utilization challenges, and giving research teams both the confidence and the access to resources they need. To see the detailed analysis and all survey data, you can download the report here.

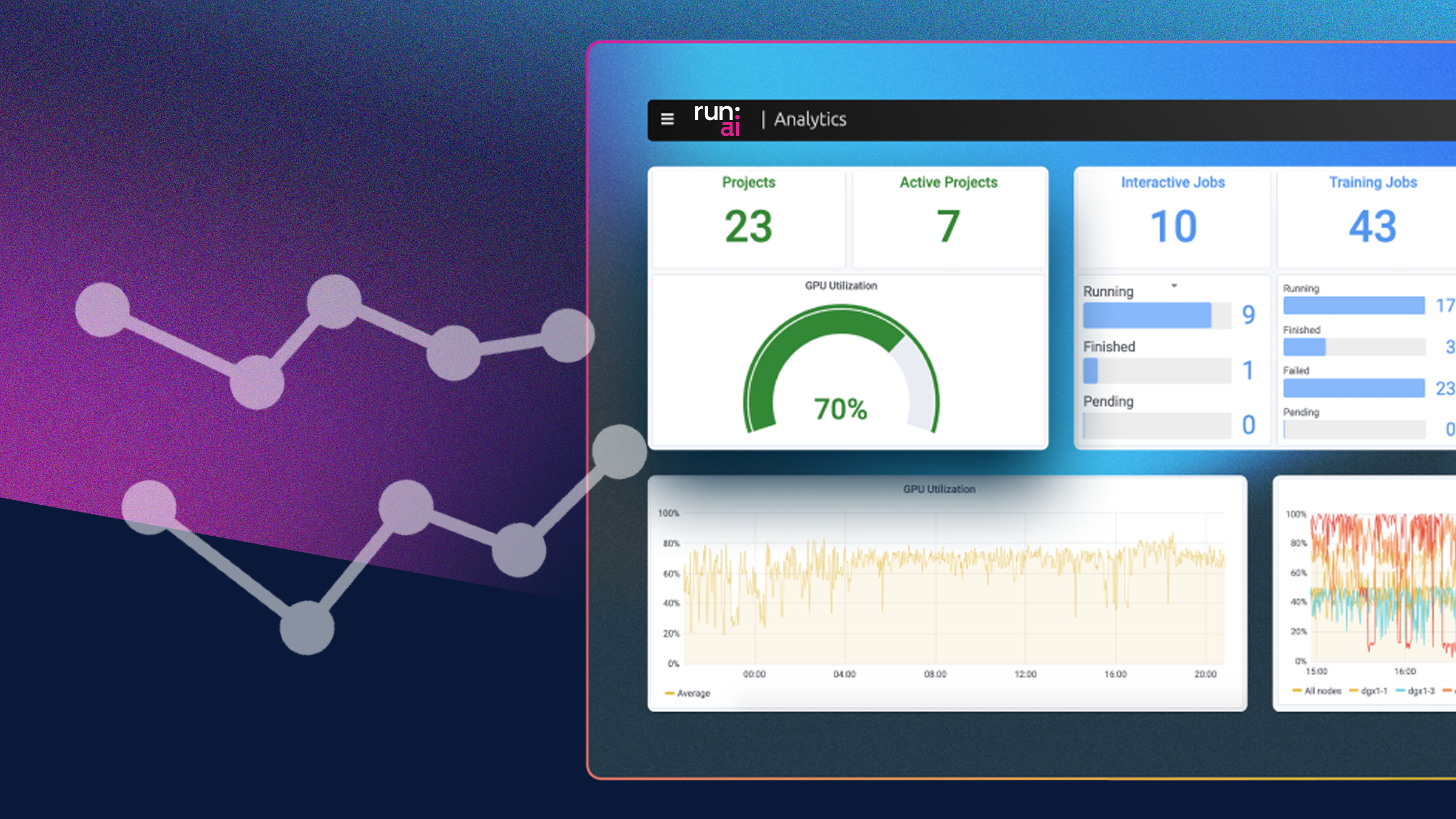

The survey data indicates that an organization’s ability to move ML models to production on time and on budget depends largely on effective allocation and high utilization of GPU resources. For enterprises seeking to solve infrastructure or compute challenges, Run:AI provides a compute resource management platform built specifically for AI workloads.

With Run:AI, data scientists get access to all the pooled compute power they need to accelerate AI experimentation, whether on-premises or in the cloud. Run:AI’s Kubernetes-based platform gives IT and MLOps teams real-time visibility into and control over GPU scheduling and dynamic provisioning, along with gains of more than 2X in utilization of existing infrastructure. Run:AI recently announced a new Researcher UI for simpler job submission, as well as two new AI technologies, ‘Thin GPU Provisioning’ and ‘Job Swapping’, which together completely automate the allocation and utilization of GPUs, bringing AI cluster utilization to near 100% and ensuring no resources sit idle. Learn more about Run:AI here.