Throughout my 25 years of researching and developing computer vision solutions, I have seen the technology transform and grow at a rapid pace. When I started out, computer vision was a niche technology; months of hard work were required to apply it to even simple applications. Today, it works seamlessly on several platforms and can be deployed in diverse applications.

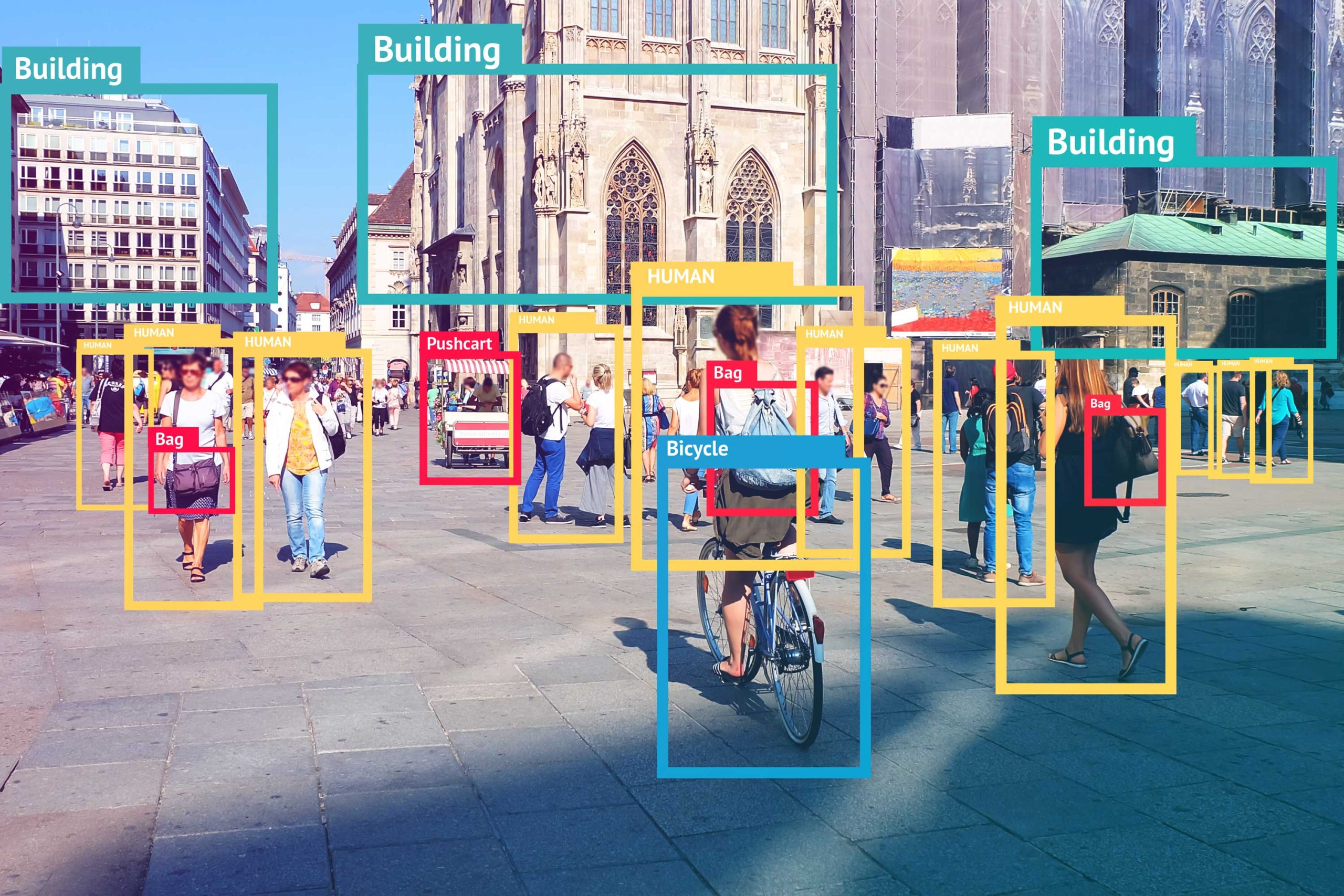

At Mobius Labs, we are enabling the super quick and easy application of computer vision technology to various industries in order to increase the value that they get from their visuals. It helps both enterprises that want to try this innovative technology for the first time and the ones that simply want to supercharge their existing services with very little investment. Using our solutions, you can find all physical and conceptual elements in images and videos, including individuals and their expressions. This aids in prioritizing content by how stylistically relevant it is to your needs.

Our Superhuman Vision solution is delivered in the form of a Software Development Kit (SDK), with some of the best image and video recognition features embedded in it. Its out-of-the-box, low-code training interface allows anybody not only to use the solution but also to train it, without any prior knowledge of programming languages. The low-code interface concentrates on the elements that can be easily handled by non-technical users. From product owners to marketing heads and project managers, anyone can train new concepts, people or styles based on the ever-growing needs of their business and the preferences of their users.

Our SDK installs directly alongside your content for unparalleled privacy and speed: on-premise, on device, or in the cloud. Future projects aim to enable Windows and other OS installations. The technology is almost always installed locally; it works stand-alone, and models as small as 7 MB can be deployed directly on hardware without any need for a network connection. The algorithms behind the computer vision models are superfast and detect objects at 52 ms per image, which makes it practical to install and run them on mobile phones and other edge devices. Newer versions of the technology can also be incorporated into space satellites to detect events like wildfires and port activities. The SDK ensures total data privacy and guarantees that user data never leaves the client's databases.

One of the applications that Superhuman Vision has powered across multiple organizations is visual search. Photo archives that have lain untouched for ages get supercharged with the tech, so that users can search for, identify and sort visuals using a wide variety of extremely detailed and subtle specifications. This powerful visual search, coupled with the ability to create new custom concepts, ensures that enterprises squeeze the maximum possible value from their new and existing visual data. We outperform the competition on accuracy and precision using very little training data.

To give optimal value to our customers, our models run on commodity hardware, so customers do not have to invest in additional hardware. Similarly, since we are a growing startup, we need to find ways to train models in budget-constrained environments in order to keep our finances in check. This forced us to explore models that are easy to train and maintain without having to spend millions of dollars on hardware.

Another core pillar for us is to make computer vision accessible to people with limited or no programming skills. The underlying techniques that we use are instances of few-shot learning, which enables the user to train new computer vision classes with very little training data, directly via an interface.
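To make the idea of few-shot learning concrete, here is a minimal sketch of one common approach, a nearest-prototype classifier. It is an illustration under stated assumptions, not a description of how our SDK works internally: the class names, the tiny 3-dimensional vectors, and the helper functions `build_prototypes` and `classify` are all hypothetical. In practice, the embeddings would come from a pretrained network rather than being written by hand.

```python
import math

def mean_vector(vectors):
    """Average a list of equal-length feature vectors element-wise."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]

def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def build_prototypes(support_set):
    """Map each class name to the mean of its few example embeddings."""
    return {label: mean_vector(examples) for label, examples in support_set.items()}

def classify(embedding, prototypes):
    """Return the class whose prototype is most similar to the embedding."""
    return max(
        prototypes,
        key=lambda label: cosine_similarity(embedding, prototypes[label]),
    )

# Toy embeddings (assumed): two examples per class stand in for the
# output of a pretrained feature extractor.
support_set = {
    "sunset": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "forest": [[0.1, 0.9, 0.2], [0.0, 0.8, 0.3]],
}
prototypes = build_prototypes(support_set)
prediction = classify([0.85, 0.15, 0.05], prototypes)
```

Adding a new class in this sketch only requires a few example embeddings and one call to `build_prototypes`; no retraining of the feature extractor is involved, which is what makes this style of approach a natural fit for an interface-driven workflow.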

At Mobius Labs, we are in continuous research cycles to find the models that satisfy both of these requirements. At a very high level, there are three areas on which we focus. The first is the data with which our models are trained; the second, the network architecture and loss function used; and the third, overall efficiency. There is a constant set of key questions we ask ourselves, as summarized in the table below:

| Data | Algorithms | Efficiency |
| --- | --- | --- |
| What kind of data should we collect? How do you understand the data? Human thinking is non-linear, and any given selection is prone to biases. How do you sample the data? How do you evaluate & analyze the results? How much data is enough? How do you fill in data gaps? | How do you pose the problem? How should the loss function be formulated? What do you look for in good feature representations? What is the best architecture? What is the network learning? How transferable is the feature representation? | How do you make your networks faster? How do you make your networks smaller? Can we effectively transfer what we have learned in the past to more efficient models? |

Although these questions often have no easy answers, addressing them anyway is what makes our day job interesting. With constant experimentation, we are discovering new and better ways to answer them. I like to think that answering them is akin to Alice’s journey down the rabbit hole. With every discovery coming from the computer vision community and our team, it gets “Curiouser and curiouser!”

In the past few years of working on these problems, many significant observations have emerged. I will include three of them here:

- Noisy training datasets can be your ally: Training on large enough datasets, even when some labels are noisy or incorrect, can often be a better option than training algorithms in a fully supervised setup. Fully supervised models sometimes overfit to a particular dataset, which can hamper generalizability, whereas label noise within a certain range can act as a regularizer and build up natural robustness.

- Transferability to new classes is a great thing to look for: Too often, people get stuck on evaluating per-class accuracy. While this is important, the practical value to end users stays roughly constant across a range of accuracy differences (we had cases where end users preferred a model with lower accuracy, since the mistakes it made resembled human mistakes and people were more forgiving). For us, more than per-class accuracy, the ability of models to learn new classes (in a few-shot sense) is a much stronger value proposition, and something we actively look for.

- Inference usually requires less network capacity than training: A significant share of model parameters is necessary only when training a model from scratch, and the number needed is also tied to the complexity of the problems we are making the network learn. Using techniques like distillation, we are able to train models that require significantly fewer parameters for inference (and therefore less compute and lower power drain). We first train one or more large models, called the teacher models, on our datasets. We then use a simpler model, called the student model, to mimic the behaviour of the teacher models, which is a simpler problem to learn.
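The teacher and student idea in the last observation can be sketched with a few lines of code. This is a minimal illustration of one widely used formulation (matching temperature-softened output distributions with a KL divergence), not necessarily the exact setup we use. The logits, the temperature of 2.0, and the function names `softmax` and `distillation_loss` are assumptions made for the example.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative logits (assumed): one teacher and two candidate students
# scoring the same image over three classes.
teacher_logits = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # disagrees with the teacher

loss_close = distillation_loss(teacher_logits, close_student)
loss_far = distillation_loss(teacher_logits, far_student)
```

A student whose outputs track the teacher's gets a loss near zero, while one that disagrees gets a larger loss. During training, the student's parameters would be updated to reduce this value, usually alongside a standard loss on the ground-truth labels.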

The process of giving machines the power of human sight and the ability to extract valuable information from visuals is a challenging but fulfilling journey. In Alice’s words: “How puzzling all these changes are! I’m never sure what I’m going to be, from one minute to another.” It is this sense of adventure that accompanies our journey of developing a product that allows anybody and everybody to create innovative computer vision applications with ease and efficiency.

---

Appu Shaji, CEO and Chief Scientist of Mobius Labs, has always been fascinated by the possibilities of computer vision technology. He completed his PhD and postdoctoral research in computer vision, and eventually founded his own company, Mobius Labs, 3 years ago.