Machine learning and AI models depend on annotations: labels that identify a particular subject within a specific representation of the data. If you want your model to make accurate predictions, you need quality data, and for supervised models that largely means accurate, consistent annotations or labels. The data itself can be as diverse as images, video, text, or audio. In this article, we will explore how this data is annotated, focusing on the different types of annotation.

What is data annotation?

Data annotation is essential to building top-performing models. It means labeling the available data, in whatever format it comes in, so that each target object or element is marked with the class it belongs to. This labeled data is used during training to help the model learn what objects of each predefined class look like, so it can connect what it was trained on with whatever it “sees” in real time. When a model performs poorly, the cause usually lies either in this data or in the algorithm. Annotations also let you interpret your model’s results, validate how it performs, and measure performance gains at a more granular level, for example per class.
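As a rough illustration of measuring performance at a more granular level, here is a minimal Python sketch that compares hypothetical model predictions against annotated labels from a validation set and reports per-class recall, i.e. the share of each class's annotated examples that the model got right. The function name and the data are made up for this example.

```python
from collections import Counter

def per_class_recall(labels, predictions):
    """Return, for each annotated class, the fraction of its examples predicted correctly."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    totals = Counter(labels)  # how many annotated examples each class has
    correct = Counter(y for y, p in zip(labels, predictions) if y == p)
    # Counter returns 0 for classes that were never predicted correctly.
    return {cls: correct[cls] / totals[cls] for cls in totals}

# Hypothetical annotated validation labels and matching model outputs.
labels = ["cat", "dog", "dog", "cat", "bird", "dog"]
predictions = ["cat", "dog", "cat", "cat", "dog", "dog"]

print(per_class_recall(labels, predictions))
```

A single overall accuracy figure would hide the fact that, in this toy data, the model never gets "bird" right; per-class numbers computed from the annotations make that visible.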

Annotation categories based on the format

Here are a few data types for annotation that you are likely to encounter when developing an AI model:

Image annotation

Image annotation mostly concerns labeling images that are either photographed or designed/illustrated, and each image has to contain the object you’re targeting. You don’t always have to start from scratch: public datasets of annotated images can save you a lot of time by cutting down on data collection. Alternatively, you can produce or generate your own datasets, for instance if you’re working on self-driving vehicles.

Text annotation

Whether you’re building an entity recognition system or a text analysis tool, text annotation helps your model recognize critical words, phrases, sentences, and paragraphs in a body of text. Models trained on annotated documents can derive insights from new documents and help automate manual, document-heavy processes in banking, medicine, insurance, government, and other sectors.
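Text annotations are often stored as character-offset spans. The minimal sketch below, assuming whitespace tokenization and a hypothetical `(start, end, label)` span format with exclusive end offsets, converts such spans into the widely used BIO token tags (B = beginning of an entity, I = inside, O = outside). Real pipelines typically use a proper tokenizer instead of `str.split`.

```python
def spans_to_bio(text, spans):
    """Convert character-offset entity spans into token-level BIO tags.

    Tokens are split on whitespace (a simplifying assumption); a token is
    tagged only if it lies entirely inside an annotated span.
    """
    tokens, tags = [], []
    pos = 0
    for token in text.split():
        start = text.index(token, pos)  # character offset of this token
        end = start + len(token)
        pos = end
        tag = "O"
        for span_start, span_end, label in spans:
            if start >= span_start and end <= span_end:
                # First token of a span gets B-, later tokens get I-.
                tag = ("B-" if start == span_start else "I-") + label
                break
        tokens.append(token)
        tags.append(tag)
    return list(zip(tokens, tags))

text = "Acme Bank approved the loan in Berlin"
# Hypothetical annotations: (start_char, end_char, label), end exclusive.
spans = [(0, 9, "ORG"), (31, 37, "LOC")]
for token, tag in spans_to_bio(text, spans):
    print(token, tag)
```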

Audio annotation

Audio annotation makes audio and speech understandable to machine learning models. NLP-based speech models need annotated audio to power practical applications such as chatbots and virtual assistant devices. The annotations add metadata to recorded sounds or speech, such as transcripts or time-stamped labels for speakers and sound events, which is what enables effective and meaningful interactions with humans.
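One common way to store audio annotations is as time-stamped segments. As a sketch under that assumption, the snippet below turns hypothetical `(start_s, end_s, label)` segments into one label per fixed-length frame, a form that frame-based audio models can consume. The half-second frame length and the `"silence"` default are arbitrary choices for the example.

```python
def segments_to_frames(segments, duration_s, frame_s=0.5, default="silence"):
    """Assign each fixed-length frame the label of the segment covering its midpoint."""
    n_frames = int(round(duration_s / frame_s))
    labels = []
    for i in range(n_frames):
        mid = (i + 0.5) * frame_s  # midpoint of frame i, in seconds
        label = default
        for start, end, seg_label in segments:
            if start <= mid < end:
                label = seg_label
                break
        labels.append(label)
    return labels

# Hypothetical annotations for a 3-second clip: (start_s, end_s, label).
segments = [(0.0, 1.0, "speech"), (1.5, 2.5, "music")]
print(segments_to_frames(segments, duration_s=3.0))
```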

Video annotation

As the name suggests, video annotation is the process of tagging or labeling video clips so that computer vision models can learn to recognize objects in them. Annotating video can be more complicated and time-consuming than annotating images, as it involves many frames and a lot of motion that needs to be captured with high accuracy.
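One way annotation tools reduce the per-frame effort in video is to have annotators label only keyframes and interpolate the frames in between. The sketch below assumes simple linear interpolation of hypothetical `(x_min, y_min, x_max, y_max)` boxes between two keyframes; real objects don't always move linearly, so interpolated frames usually still need review.

```python
def interpolate_boxes(key_a, key_b):
    """Linearly interpolate boxes between two annotated keyframes.

    Each keyframe is (frame_index, (x_min, y_min, x_max, y_max)).
    Returns {frame_index: box} for every frame from key_a to key_b inclusive.
    """
    (fa, box_a), (fb, box_b) = key_a, key_b
    if fb <= fa:
        raise ValueError("second keyframe must come after the first")
    boxes = {}
    for f in range(fa, fb + 1):
        t = (f - fa) / (fb - fa)  # 0.0 at the first keyframe, 1.0 at the second
        boxes[f] = tuple(a + t * (b - a) for a, b in zip(box_a, box_b))
    return boxes

# Hypothetical keyframes annotated by hand at frames 0 and 4.
tracks = interpolate_boxes((0, (10, 20, 50, 60)), (4, (30, 20, 70, 80)))
print(tracks[2])
```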

Main types of data annotation

You can annotate your data in different ways, which is often determined based on your use case. When deciding how to annotate, it all comes down to asking yourself, “What is my data?” Even though, in essence, annotation means the same thing for every data type, techniques differ. For now, we’ll narrow it down to the most common types of image annotation:

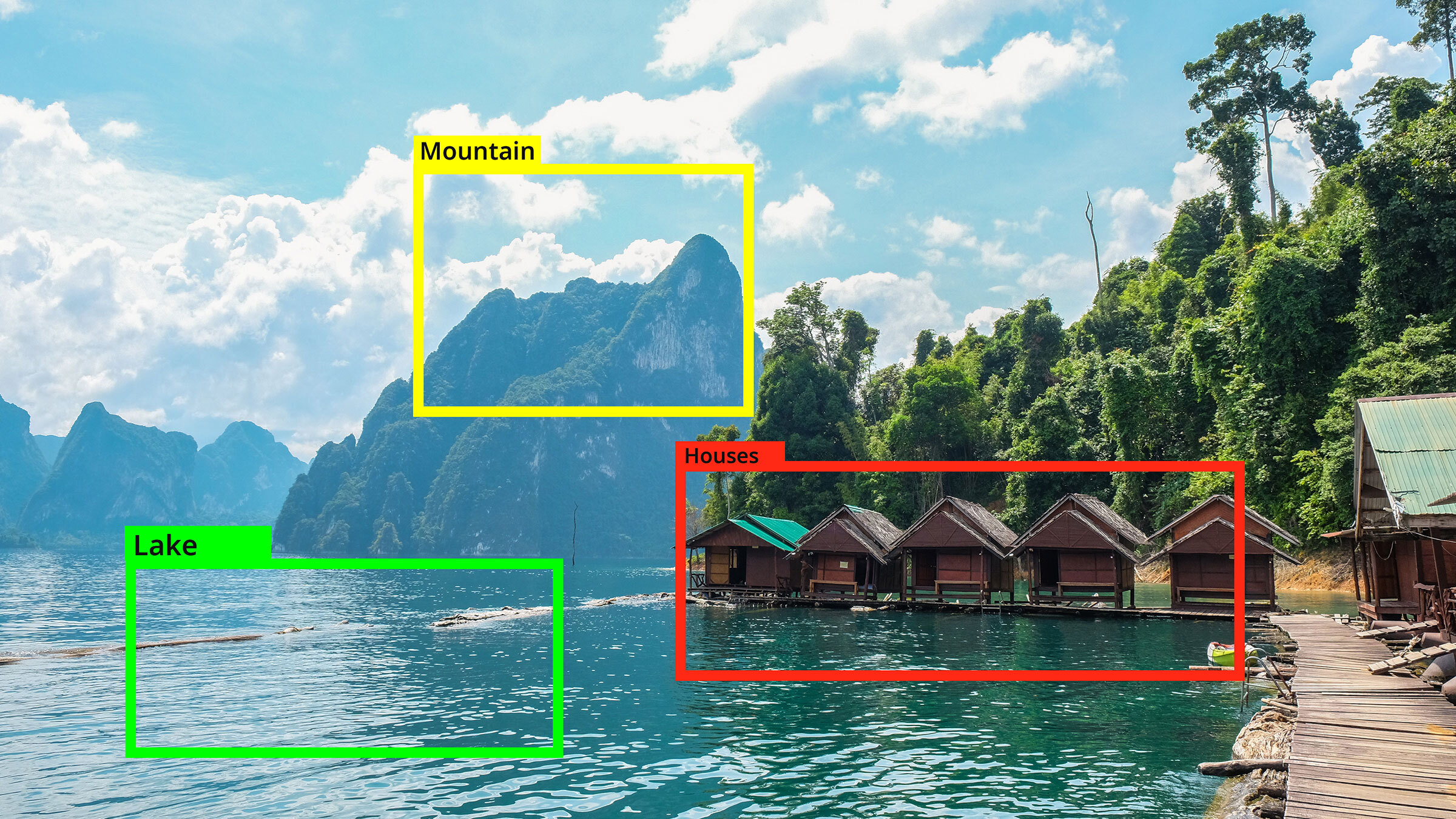

Bounding boxes

Bounding boxes show the location of an object by drawing a rectangle, usually aligned with the image axes, tightly around each object of interest. This helps algorithms learn to recognize objects in an image and use that information when making predictions.
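Bounding boxes are often stored as `(x_min, y_min, x_max, y_max)` pixel coordinates. Assuming that format, the minimal sketch below computes intersection over union (IoU), a standard measure of how well two boxes overlap, for example a model prediction against an annotated box, or two annotators' boxes for the same object. The box values are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping region (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

annotated = (10, 10, 50, 50)  # hypothetical ground-truth box
predicted = (30, 30, 70, 70)  # hypothetical model prediction
print(iou(annotated, predicted))
```

An IoU of 1.0 means the boxes coincide and 0.0 means they don't overlap at all; here the overlap is 400 of 2800 square pixels, or about 0.14.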

Polygons

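Polygons are used to annotate the outlines of objects with irregular shapes, such as rooftops, vegetation, and landmarks. Because you place each vertex yourself, you have more flexibility in matching the shape, and the annotation can follow the object’s edges much more closely than a bounding box.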
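A polygon annotation is essentially an ordered list of vertices. As a sketch, assuming `(x, y)` vertex tuples for a simple (non-self-intersecting) polygon, the code below computes its area with the shoelace formula and its enclosing bounding box, which shows how much tighter a polygon can fit than a box. The rooftop outline is made up.

```python
def polygon_area(points):
    """Area of a simple polygon given as [(x, y), ...] via the shoelace formula."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0

def polygon_bbox(points):
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))

# Hypothetical L-shaped rooftop outline.
roof = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)]
print(polygon_area(roof), polygon_bbox(roof))
```

For this L-shaped outline, the polygon covers 6 square units while its bounding box covers 12, so half of the box would be background.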

Polylines

Polylines are used to annotate line-like structures such as wires, lanes, and sidewalks. A common example is autonomous driving, where polylines mark the lanes on a street so that the vehicle can learn to detect them and drive accordingly.
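Unlike a polygon, a polyline is open: its last vertex does not connect back to the first. Here is a minimal sketch, assuming vertices stored as `(x, y)` pixel tuples, that measures a polyline's total length; it relies on `math.dist`, available since Python 3.8, and the lane coordinates are hypothetical.

```python
import math

def polyline_length(points):
    """Total length of an open polyline given as [(x, y), ...] vertices."""
    # Sum the lengths of consecutive segments; no closing segment is added.
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

# Hypothetical lane marking annotated with three vertices (pixel coordinates).
lane = [(100, 700), (130, 660), (130, 600)]
print(polyline_length(lane))
```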

Key-points

Key-points annotation is used to mark small shapes and details by placing dots on specific points of the target object. Key-points are commonly applied in projects that require annotating facial features, body parts, and poses.
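Key-points are frequently stored as a flat list of `(x, y, v)` triples, as in the COCO keypoint format, where `v` is a visibility flag (0 = not labeled, 1 = labeled but not visible, 2 = labeled and visible). The sketch below assumes that layout and a hypothetical three-point skeleton, and maps the flat list to named points.

```python
KEYPOINT_NAMES = ["nose", "left_eye", "right_eye"]  # hypothetical subset of a skeleton

def parse_keypoints(flat, names=KEYPOINT_NAMES):
    """Turn a flat [x1, y1, v1, x2, y2, v2, ...] list into {name: (x, y, v)}.

    Keypoints with visibility 0 (not labeled) are skipped.
    """
    if len(flat) != 3 * len(names):
        raise ValueError("expected one (x, y, v) triple per keypoint name")
    parsed = {}
    for i, name in enumerate(names):
        x, y, v = flat[3 * i: 3 * i + 3]
        if v > 0:
            parsed[name] = (x, y, v)
    return parsed

annotation = [120, 80, 2, 110, 70, 2, 0, 0, 0]  # right eye not labeled
print(parse_keypoints(annotation))
```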

3D cuboids

Similar to bounding boxes, this annotation type encloses the object in a rectangular box, which in this case is three-dimensional. As a result, it also captures the object’s height, length, and width, giving a machine learning algorithm a 3D representation of the object.
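A 3D cuboid is commonly parameterized by its center, its dimensions, and a heading (yaw) angle around the vertical axis. Assuming that parameterization (axis and angle conventions vary between datasets), this sketch recovers the cuboid's eight corners; the car dimensions are hypothetical.

```python
import math

def cuboid_corners(center, size, yaw):
    """Return the 8 corners of a 3D box.

    center: (cx, cy, cz); size: (length, width, height);
    yaw: rotation around the vertical (z) axis in radians.
    """
    cx, cy, cz = center
    length, width, height = size
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                # Rotate the offset in the ground plane, then translate to the center.
                x = cx + dx * cos_y - dy * sin_y
                y = cy + dx * sin_y + dy * cos_y
                corners.append((x, y, cz + dz))
    return corners

# Hypothetical car annotation: 4.5 m long, 1.8 m wide, 1.5 m tall, no rotation.
box = cuboid_corners(center=(10.0, 2.0, 0.75), size=(4.5, 1.8, 1.5), yaw=0.0)
print(len(box), box[0])
```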

Semantic segmentation

Semantic segmentation is more involved: it divides an image into regions by assigning a class label to every pixel. If you have an image with four people, semantic segmentation will label all of their pixels with the same class, person, without distinguishing one person from another.
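A semantic segmentation annotation is usually a mask with one class ID per pixel. In the tiny hypothetical mask below, four separate people are all labeled with the same ID, so counting pixels per class tells you how much of the image is "person" but not how many people there are. The class IDs and names are assumptions for this example.

```python
from collections import Counter

# Hypothetical 4x6 semantic mask: 0 = background, 1 = person.
# Every person pixel gets the same class ID, so separate people are indistinguishable.
CLASS_NAMES = {0: "background", 1: "person"}
semantic_mask = [
    [1, 0, 1, 0, 1, 1],
    [1, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1, 0],
]

def class_pixel_counts(mask, class_names):
    """Count how many pixels carry each class label."""
    counts = Counter(pixel for row in mask for pixel in row)
    return {class_names[c]: n for c, n in sorted(counts.items())}

print(class_pixel_counts(semantic_mask, CLASS_NAMES))
```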

Instance segmentation

Unlike semantic segmentation, instance segmentation identifies the existence, location, shape, and count of individual objects. So, in our previous example, each person is treated as a separate instance, even though all four are assigned the same label.
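An instance segmentation mask can give each object its own ID, while a separate lookup maps IDs to class labels. The sketch below assumes that layout (a hypothetical 4x6 mask with four people, IDs 1 to 4, and 0 for background) and shows that the number of people can be recovered, while collapsing IDs back to class names yields a semantic-style mask that loses this count.

```python
# Hypothetical 4x6 instance mask: 0 = background, each positive ID is one object.
instance_mask = [
    [1, 0, 2, 0, 3, 3],
    [1, 0, 2, 0, 0, 0],
    [0, 0, 0, 0, 4, 0],
    [0, 0, 0, 0, 4, 0],
]
# Every instance shares the class label "person", but keeps its own ID.
instance_classes = {1: "person", 2: "person", 3: "person", 4: "person"}

def count_instances(mask, classes):
    """Return {class_name: number_of_distinct_instances} for the IDs present in the mask."""
    ids = {pixel for row in mask for pixel in row if pixel != 0}
    counts = {}
    for instance_id in ids:
        name = classes[instance_id]
        counts[name] = counts.get(name, 0) + 1
    return counts

def to_semantic(mask, classes):
    """Collapse instance IDs into class names, which is what a semantic mask keeps."""
    return [[classes.get(p, "background") for p in row] for row in mask]

print(count_instances(instance_mask, instance_classes))
```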

Final thoughts

In this article, we discussed what annotation is, its categories based on data format, and the main types of image annotation. Used properly, accurate annotations can significantly improve your model’s performance. The main things to consider when collecting and annotating data for your model are its type, its volume, the external conditions that may affect data quality, and potential bias when deciding which data will serve your project best.